Computer Vision Projects

This project covers several core computer vision topics, including spatial correspondence, filter banks, clustering, spatial pyramids, Lucas-Kanade alignment, RANSAC, homographies, epipolar geometry, and triangulation. The full code and report can be found in the GitHub repo.

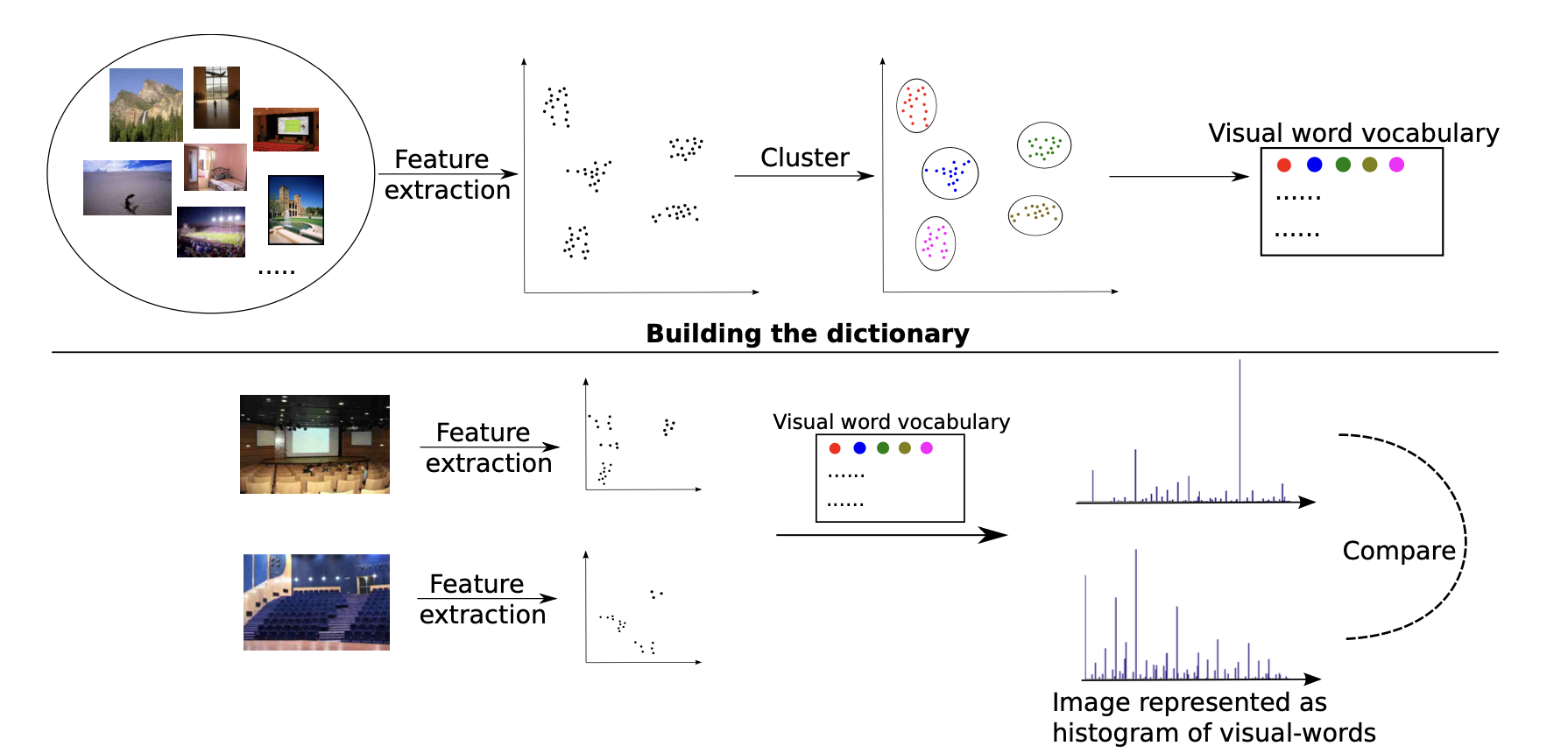

Scene Recognition using Spatial Pyramid Bag-of-Words Models

Given an image, can a computer program determine where it was taken? The first sub-project built a representation based on bags of visual words and used spatial pyramid matching to classify images into scene categories.

First, visual features are extracted from a training set of images using the responses of a predefined filter bank. Next, a dictionary of visual words is built by clustering these features and taking the cluster centers. Each image is then represented as a histogram of visual words. To classify a new image, its histogram is compared with those of the training images in this visual-word space, and the label of the nearest match is assigned.
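The dictionary-building and nearest-neighbor steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions and is not the project's code. It uses k-means for the clustering step, represents each image as an L1-normalized histogram of visual words, and compares histograms with histogram intersection. The helper names (`kmeans`, `word_histogram`, `classify`) are hypothetical, and the toy 2-D features stand in for real filter-bank responses.

```python
import random

# Minimal bag-of-visual-words sketch (hypothetical helpers).
# Assumes k-means for the dictionary and histogram intersection for matching.


def nearest(p, centers):
    """Index of the center closest to p (squared Euclidean distance)."""
    return min(range(len(centers)),
               key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centers[j])))


def kmeans(points, k, iters=20, seed=0):
    """Plain k-means; the returned centers act as the visual-word dictionary."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:  # assignment step
            groups[nearest(p, centers)].append(p)
        for j, g in enumerate(groups):  # update step; keep old center if empty
            if g:
                centers[j] = [sum(c) / len(g) for c in zip(*g)]
    return centers


def word_histogram(features, dictionary):
    """Bag of words: L1-normalized counts of each feature's nearest visual word."""
    hist = [0.0] * len(dictionary)
    for f in features:
        hist[nearest(f, dictionary)] += 1
    return [h / len(features) for h in hist]


def classify(query, train_hists, train_labels):
    """1-nearest neighbor under histogram intersection similarity."""
    def similarity(h):
        return sum(min(a, b) for a, b in zip(query, h))
    best = max(range(len(train_hists)), key=lambda i: similarity(train_hists[i]))
    return train_labels[best]


# Toy 2-D "filter responses" standing in for real filter-bank features.
beach = [(0.1, 0.0), (0.0, 0.9), (0.2, 1.1), (0.1, 0.1)]
forest = [(5.0, 5.1), (5.2, 6.0), (4.9, 5.9), (5.1, 4.8)]
dictionary = kmeans(beach + forest, k=2)
train = [word_histogram(beach, dictionary), word_histogram(forest, dictionary)]
query = word_histogram([(0.05, 0.2), (0.1, 1.0)], dictionary)
print(classify(query, train, ["beach", "forest"]))  # beach
```

Histogram intersection is a natural choice here because both histograms sum to 1, so the similarity ranges from 0 (no shared words) to 1 (identical distributions).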

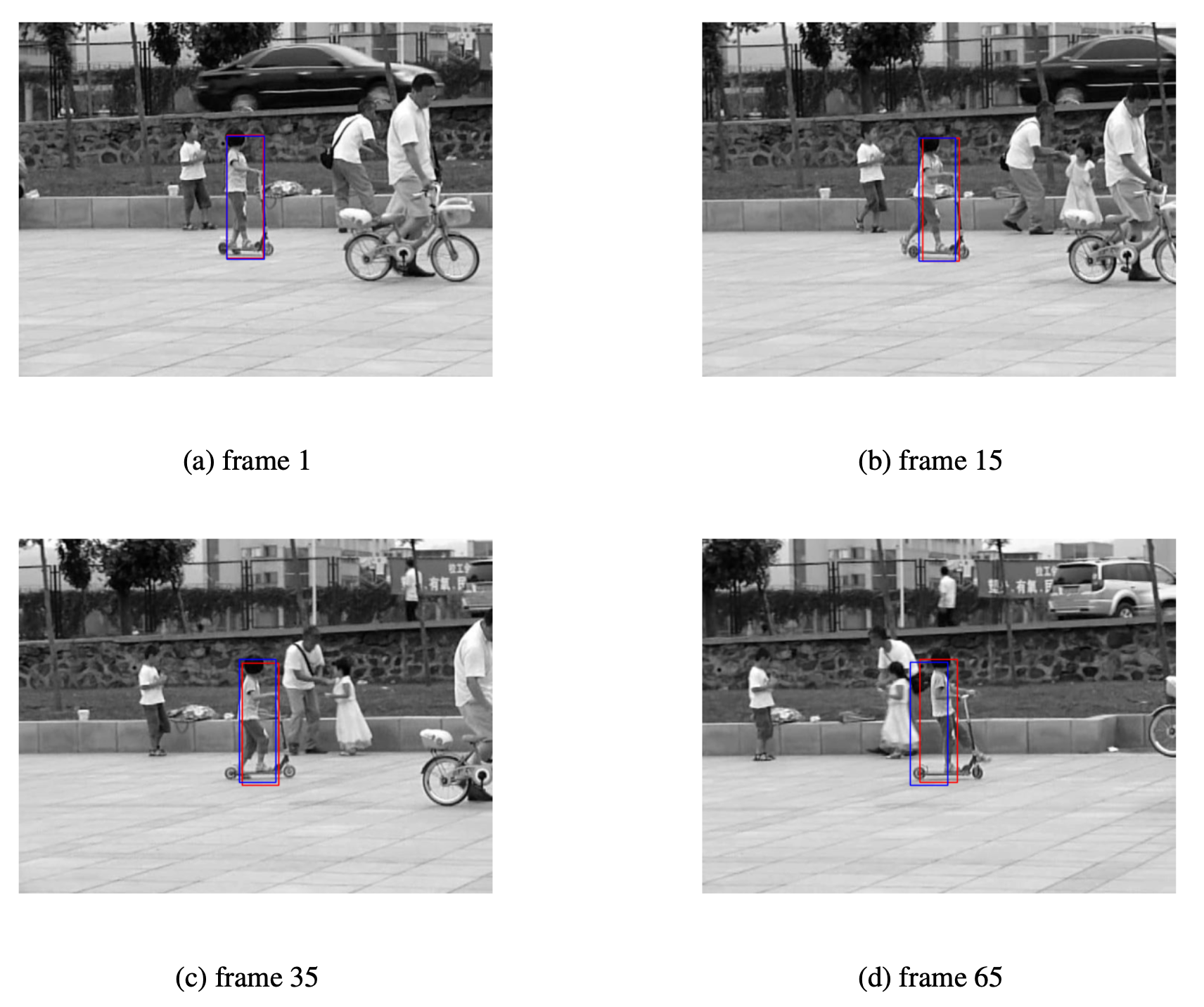

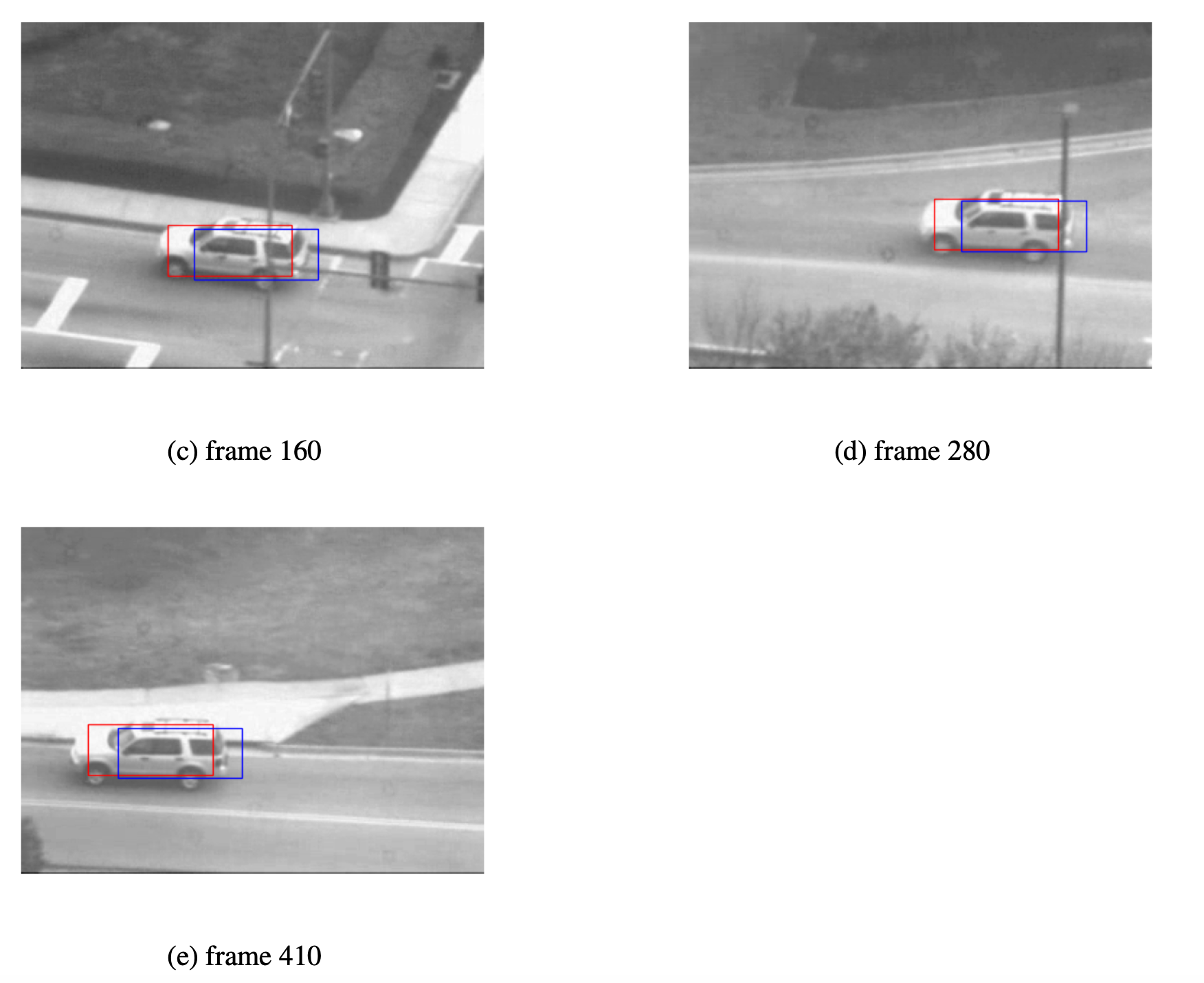
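The spatial pyramid matching mentioned in the scene-recognition overview keeps coarse layout information. It builds visual-word histograms over increasingly fine grids and concatenates them with level weights. The sketch below is a minimal illustration and makes two assumptions. First, it uses the common weighting of 2^-L for level 0 and 2^(l-L-1) for each finer level l, which sums to 1. Second, it takes a precomputed map of word indices per pixel as input. The function names are hypothetical.

```python
# Minimal spatial-pyramid sketch; assumes level weights 2^-L for level 0
# and 2^(l-L-1) for levels l >= 1. Function names are hypothetical.


def spatial_pyramid(wordmap, num_words, max_level):
    """Concatenate weighted word histograms over 1x1, 2x2, ... grids.

    wordmap: 2-D list of word indices in [0, num_words).
    """
    H, W = len(wordmap), len(wordmap[0])
    feature = []
    for level in range(max_level + 1):
        if level == 0:
            weight = 2.0 ** -max_level
        else:
            weight = 2.0 ** (level - max_level - 1)
        n = 2 ** level  # the image is split into n x n cells at this level
        for i in range(n):
            for j in range(n):
                hist = [0.0] * num_words
                for r in range(i * H // n, (i + 1) * H // n):
                    for c in range(j * W // n, (j + 1) * W // n):
                        hist[wordmap[r][c]] += 1
                # Dividing by the pixel count makes each level sum to its weight.
                feature.extend(weight * h / (H * W) for h in hist)
    return feature


def intersection(h1, h2):
    """Histogram intersection similarity."""
    return sum(min(a, b) for a, b in zip(h1, h2))


# Two 4x4 word maps with identical word counts but mirrored layouts.
left_right = [[0, 0, 1, 1] for _ in range(4)]
right_left = [[1, 1, 0, 0] for _ in range(4)]
a = spatial_pyramid(left_right, num_words=2, max_level=1)
b = spatial_pyramid(right_left, num_words=2, max_level=1)
print(len(a), sum(a))      # 10 1.0
print(intersection(a, b))  # 0.5
```

A plain bag-of-words histogram of these two maps would be identical, giving an intersection of 1.0. The level-1 cells are what tell the two layouts apart.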

Lucas-Kanade Alignment

For this sub-project, Lucas-Kanade (LK), a well-known technique for tracking features across image sequences, is implemented. LK estimates optical flow, the apparent motion of pixels between successive frames. The project first covers the fundamentals of LK tracking for pure translation with a single template and explores two template-update strategies: a naive update and an update with drift correction. The tracker is then extended to affine motion and used to implement a motion subtraction method that identifies moving pixels in a scene.
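The translation-only tracker described above can be sketched as a forward-additive Lucas-Kanade loop. Each iteration linearizes the current frame around the current shift, solves the 2x2 normal equations for an update, and repeats until the update is tiny. This minimal pure-Python sketch assumes grayscale frames stored as lists of rows, bilinear interpolation, and central-difference gradients. The function names and synthetic frames are hypothetical, and the sketch leaves out the template-update and drift-correction strategies.

```python
import math

# Minimal forward-additive Lucas-Kanade sketch for pure translation.
# Assumes grayscale frames as lists of rows; names are hypothetical.


def bilinear(img, x, y):
    """Sample img at real-valued column x and row y, clamped to the image."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    c0, r0 = min(int(x), w - 2), min(int(y), h - 2)
    ax, ay = x - c0, y - r0
    top = (1 - ax) * img[r0][c0] + ax * img[r0][c0 + 1]
    bottom = (1 - ax) * img[r0 + 1][c0] + ax * img[r0 + 1][c0 + 1]
    return (1 - ay) * top + ay * bottom


def lk_translation(template, image, x0, y0, iters=50, eps=1e-4):
    """Find (dx, dy) such that image(x + dx, y + dy) ~ template(x, y).

    template[r][c] is the pixel at column x0 + c, row y0 + r of the previous frame.
    """
    dx = dy = 0.0
    for _ in range(iters):
        hxx = hxy = hyy = bx = by = 0.0
        for r, row in enumerate(template):
            for c, t in enumerate(row):
                x, y = x0 + c + dx, y0 + r + dy
                # Gradient of the current frame at the warped location.
                gx = (bilinear(image, x + 1, y) - bilinear(image, x - 1, y)) / 2
                gy = (bilinear(image, x, y + 1) - bilinear(image, x, y - 1)) / 2
                err = t - bilinear(image, x, y)
                hxx += gx * gx
                hxy += gx * gy
                hyy += gy * gy
                bx += gx * err
                by += gy * err
        # Solve [hxx hxy; hxy hyy] * delta = [bx; by] by Cramer's rule.
        det = hxx * hyy - hxy * hxy
        ddx = (hyy * bx - hxy * by) / det
        ddy = (hxx * by - hxy * bx) / det
        dx, dy = dx + ddx, dy + ddy
        if math.hypot(ddx, ddy) < eps:
            break
    return dx, dy


def texture(x, y):
    """Smooth synthetic intensity pattern for the demo frames."""
    return math.sin(0.3 * x) + math.cos(0.25 * y) + 0.5 * math.sin(0.2 * (x + y))


frame1 = [[texture(x, y) for x in range(40)] for y in range(40)]
# frame2 shows frame1's content moved by (+1.5, -0.8) pixels.
frame2 = [[texture(x - 1.5, y + 0.8) for x in range(40)] for y in range(40)]
template = [row[10:30] for row in frame1[10:30]]
print(lk_translation(template, frame2, x0=10, y0=10))  # approximately (1.5, -0.8)
```

The affine variant follows the same pattern with a 6-parameter warp and a 6x6 system in place of the 2x2 one.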

Augmented Reality with Planar Homographies

In this sub-project, an AR application is implemented using planar homographies. First, feature points are detected with FAST and described with BRIEF descriptors, which summarize the image content around each feature point in as succinct yet descriptive a manner as possible. After point correspondences are found between two images, the homography between them is estimated. This homography is then used to warp images, which forms the basis of the final AR application.
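Feature description and matching can be illustrated with a BRIEF-style binary descriptor. Each bit records which of two fixed pixels in a patch around the keypoint is darker, and descriptors are matched by Hamming distance. This is a hypothetical sketch, not the project's implementation, and it makes several assumptions. Keypoints are already detected (for example, by a corner detector such as FAST). Pixel-pair tests are drawn uniformly in a 9x9 patch. The smoothing step that real BRIEF applies before the tests is skipped. Matches are filtered with a ratio test. All names and the synthetic images are illustrative.

```python
import random

# Minimal BRIEF-style descriptor and Hamming matcher (a sketch, not the project code).
# Assumptions: uniform random test pairs in a 9x9 patch, no pre-smoothing,
# and a ratio test for matching. Names are hypothetical.
HALF = 4        # patch half-width (9x9 patch)
NUM_BITS = 256


def make_tests(seed=0):
    """Fixed random pixel-pair offsets, shared by every descriptor."""
    rng = random.Random(seed)

    def offset():
        return rng.randint(-HALF, HALF), rng.randint(-HALF, HALF)

    return [(offset(), offset()) for _ in range(NUM_BITS)]


def brief(img, x, y, tests):
    """Descriptor at (x, y) packed into an int: bit i is 1 if I(p_i) < I(q_i)."""
    bits = 0
    for i, ((px, py), (qx, qy)) in enumerate(tests):
        if img[y + py][x + px] < img[y + qy][x + qx]:
            bits |= 1 << i
    return bits


def hamming(a, b):
    """Number of differing bits between two packed descriptors."""
    return bin(a ^ b).count("1")


def match(desc1, desc2, ratio=0.8):
    """Nearest neighbor by Hamming distance, kept only if clearly better than the runner-up."""
    matches = []
    for i, d in enumerate(desc1):
        ranked = sorted((hamming(d, e), j) for j, e in enumerate(desc2))
        if len(ranked) == 1 or ranked[0][0] < ratio * ranked[1][0]:
            matches.append((i, ranked[0][1]))
    return matches


rng = random.Random(1)
img1 = [[rng.random() for _ in range(30)] for _ in range(30)]
# img2 is img1 shifted 3 px right and 2 px down (new border pixels are 0).
img2 = [[img1[y - 2][x - 3] if y >= 2 and x >= 3 else 0.0 for x in range(30)]
        for y in range(30)]
tests = make_tests()
kps1 = [(5, 5), (10, 8), (15, 20), (20, 12)]
kps2 = [(x + 3, y + 2) for x, y in reversed(kps1)]  # same points, reversed order
d1 = [brief(img1, x, y, tests) for x, y in kps1]
d2 = [brief(img2, x, y, tests) for x, y in kps2]
print(match(d1, d2))  # [(0, 3), (1, 2), (2, 1), (3, 0)]
```

Packing the bits into an integer makes each comparison a single XOR followed by a bit count. This cheap comparison is the main appeal of binary descriptors over floating-point ones.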

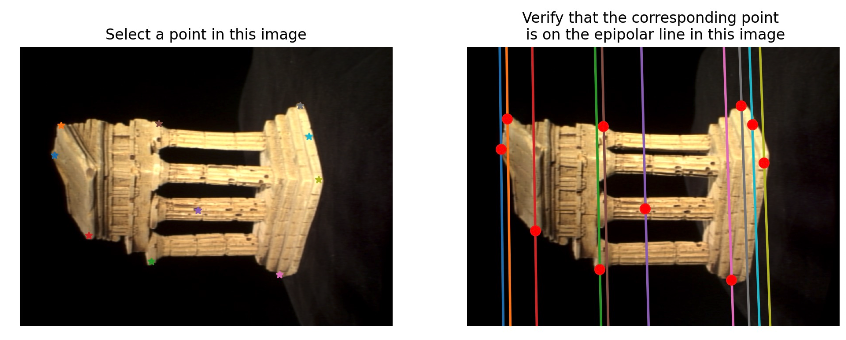

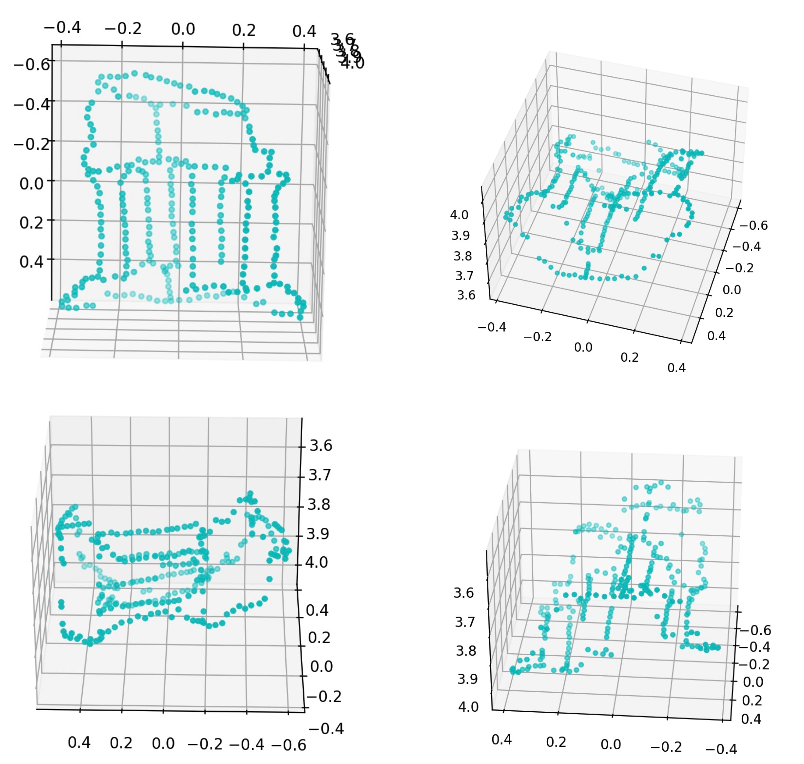
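The project list pairs RANSAC with homographies, and the homography-estimation step of the AR pipeline can be sketched that way. The loop repeatedly fits a homography to four random correspondences and keeps the model that agrees with the most matches. This is a minimal sketch under stated assumptions, not the project's implementation. Each 4-point fit fixes h33 = 1 and solves an 8x8 linear system. That is a simpler substitute for the usual SVD-based DLT, and it fails if the true h33 is 0. The 2-pixel inlier threshold, the 500 iterations, and the synthetic correspondences are arbitrary choices, and all names are hypothetical.

```python
import random

# Minimal RANSAC homography sketch (not the project code). Assumption: each
# minimal sample is solved exactly with h33 fixed to 1 instead of an SVD-based DLT.


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting; None if singular."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            return None
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def homography_from_4(pairs):
    """3x3 H (h33 = 1) mapping each (x, y) to (u, v) for four correspondences."""
    A, b = [], []
    for (x, y), (u, v) in pairs:
        A.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    h = solve(A, b)
    return None if h is None else [h[0:3], h[3:6], h[6:8] + [1.0]]


def project(H, x, y):
    """Apply H to (x, y) in homogeneous coordinates; None if it maps to infinity."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        return None
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def ransac_homography(pairs, iters=500, tol=2.0, seed=0):
    """Return the 4-point H with the most inliers (reprojection error below tol)."""
    rng = random.Random(seed)
    best_H, best_inliers = None, []
    for _ in range(iters):
        H = homography_from_4(rng.sample(pairs, 4))
        if H is None:  # degenerate sample
            continue
        inliers = []
        for i, ((x, y), (u, v)) in enumerate(pairs):
            p = project(H, x, y)
            if p is not None:
                du, dv = p[0] - u, p[1] - v
                if du * du + dv * dv < tol * tol:
                    inliers.append(i)
        if len(inliers) > len(best_inliers):
            best_H, best_inliers = H, inliers
    return best_H, best_inliers


H_true = [[1.1, 0.05, 5.0], [-0.03, 0.95, -3.0], [0.001, 0.0005, 1.0]]
rng = random.Random(1)
pairs = []
for i in range(25):
    x, y = rng.uniform(0, 50), rng.uniform(0, 50)
    u, v = project(H_true, x, y)
    # The last five correspondences are gross outliers (30 px right, 25 px up).
    pairs.append(((x, y), (u, v) if i < 20 else (u + 30.0, v - 25.0)))
H, inliers = ransac_homography(pairs)
print(len(inliers))  # 20
```

A fuller version would typically re-estimate H from all inliers by least squares on normalized coordinates once the best sample is found.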

3D Reconstruction

This sub-project implements the eight-point algorithm, which estimates the fundamental matrix relating a pair of images taken from different viewpoints, and triangulation, which recovers the 3D locations of corresponding image points. Together, these steps reconstruct and visualize a 3D point cloud from the image pair.
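Both steps named above can be sketched end to end. The normalized eight-point algorithm stacks one linear constraint x2^T F x1 = 0 per correspondence, takes the null vector, and enforces rank 2. Linear (DLT) triangulation then intersects the two back-projected rays by solving a small homogeneous system per point. This is a minimal pure-Python sketch under stated assumptions, not the project's implementation:

- Null vectors come from a small Jacobi eigen-solver applied to A^T A rather than the SVD most implementations use.
- Hartley normalization is assumed.
- The camera matrices are taken as known; in practice they would come from the essential matrix and the intrinsics.
- The scene is synthetic and noise-free.

All names are hypothetical.

```python
import math
import random

# Minimal normalized eight-point + linear triangulation sketch (not the project
# code). Assumption: a small Jacobi eigen-solver on A^T A replaces the usual SVD.


def smallest_eigvec(S, sweeps=50):
    """Unit eigenvector for the smallest eigenvalue of symmetric S (cyclic Jacobi)."""
    n = len(S)
    A = [row[:] for row in S]
    V = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p][q] == 0.0:
                    continue
                theta = (A[q][q] - A[p][p]) / (2 * A[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for M in (A, V):  # columns: M <- M J, where J zeroes A[p][q]
                    for k in range(n):
                        mp, mq = M[k][p], M[k][q]
                        M[k][p], M[k][q] = c * mp - s * mq, s * mp + c * mq
                for k in range(n):  # rows: A <- J^T A
                    ap, aq = A[p][k], A[q][k]
                    A[p][k], A[q][k] = c * ap - s * aq, s * ap + c * aq
    i = min(range(n), key=lambda j: A[j][j])
    return [V[k][i] for k in range(n)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def normalize(pts):
    """Hartley normalization: centroid to the origin, mean distance sqrt(2)."""
    cx = sum(x for x, _ in pts) / len(pts)
    cy = sum(y for _, y in pts) / len(pts)
    s = math.sqrt(2) * len(pts) / sum(math.hypot(x - cx, y - cy) for x, y in pts)
    T = [[s, 0.0, -s * cx], [0.0, s, -s * cy], [0.0, 0.0, 1.0]]
    return [(s * (x - cx), s * (y - cy)) for x, y in pts], T


def eight_point(pts1, pts2):
    """Fundamental matrix F with x2^T F x1 = 0, rank 2, unit Frobenius norm."""
    n1, T1 = normalize(pts1)
    n2, T2 = normalize(pts2)
    rows = [[u2 * u1, u2 * v1, u2, v2 * u1, v2 * v1, v2, u1, v1, 1.0]
            for (u1, v1), (u2, v2) in zip(n1, n2)]
    f = smallest_eigvec([[sum(r[i] * r[j] for r in rows) for j in range(9)] for i in range(9)])
    F = [f[0:3], f[3:6], f[6:9]]
    v = smallest_eigvec(matmul([list(c) for c in zip(*F)], F))  # right null direction
    # Enforce rank 2: F <- F (I - v v^T) removes the smallest singular component.
    F = matmul(F, [[float(i == j) - v[i] * v[j] for j in range(3)] for i in range(3)])
    F = matmul([list(c) for c in zip(*T2)], matmul(F, T1))  # undo normalization
    norm = math.sqrt(sum(x * x for row in F for x in row))
    return [[x / norm for x in row] for row in F]


def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one correspondence given 3x4 cameras."""
    rows = []
    for P, (u, v) in ((P1, x1), (P2, x2)):
        rows.append([u * P[2][k] - P[0][k] for k in range(4)])
        rows.append([v * P[2][k] - P[1][k] for k in range(4)])
    X = smallest_eigvec([[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)])
    return tuple(X[k] / X[3] for k in range(3))


def project(P, X):
    h = [sum(P[i][k] * c for k, c in enumerate((*X, 1.0))) for i in range(3)]
    return h[0] / h[2], h[1] / h[2]


a = 0.1  # demo setup: camera 2 rotated 0.1 rad about y and translated
P1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
P2 = [[math.cos(a), 0.0, math.sin(a), -1.0], [0.0, 1.0, 0.0, 0.05],
      [-math.sin(a), 0.0, math.cos(a), 0.1]]
rng = random.Random(0)
points = [(rng.uniform(-2, 2), rng.uniform(-2, 2), rng.uniform(3, 6)) for _ in range(12)]
pts1 = [project(P1, X) for X in points]
pts2 = [project(P2, X) for X in points]
F = eight_point(pts1, pts2)
cloud = [triangulate(P1, P2, x1, x2) for x1, x2 in zip(pts1, pts2)]
```

Because the demo data are noise-free, every epipolar residual x2^T F x1 is zero up to rounding, and the triangulated cloud matches the generating points. Real correspondences would leave small nonzero residuals.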